Data Validation Of My Interview Dataset Using Goodtables

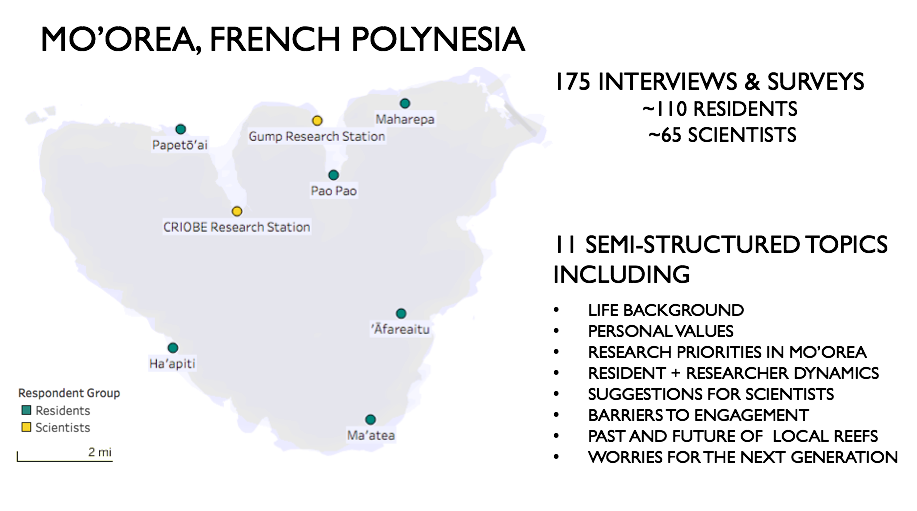

I used goodtables to validate the interview data we gathered as part of the first chapter of my PhD. These data were collected in Mo'orea, French Polynesia, where we interviewed both residents and scientists regarding the future of research in Mo'orea.

The dock of Gump Research Station in Mo'orea, French Polynesia.

As an aspiring marine scientist, I initially wanted to help solve global environmental concerns by discovering objective truths. However, I've realized that to create lasting environmental solutions the notion of perceived truths is equally important and much less studied. Individuals’ subjective perceptions become their truths and these truths then define their actions and priorities. These, in turn, define our world.

In the face of climate change, coral reef ecosystems and the 500 million people who depend on them are increasingly at risk. While a vast majority of coral reef research funding is allocated towards understanding the ecological mechanisms that underlie reef health, the ability of a coral reef to recover from disturbances is often determined by human actions or inactions. Factors such as pollution, fishing intensity and tourism have significant impacts on local reefs. However, laws restricting these actions are often not followed when communities feel ill-informed and excluded from research or management.

Perceived lack of local involvement in foreign research can lead to governments creating strict legislation. For example, last year Indonesia created a law making it mandatory for foreign researchers to have local collaborators and for the work they do to benefit Indonesia. These types of sanctions hold us, as foreign scientists, accountable for considering for whom research in the tropics is being done.

Amplifying local involvement and unifying the perspectives of researchers and coastal communities is critical not only in reducing inequity in science, but also in securing lasting coral reef health. Mo’orea, French Polynesia was the ideal case study location to initially address these topics due to its two foreign research stations, colonial legacy and strong cultural and nutritional ties to the reef. We partnered with collaborators from the Atitia cultural center to interview local residents and scientists regarding 11 topics, including research topic priorities, and cataloged their suggestions for scientists.

Interview sites in Mo'orea, French Polynesia.

To gather our data, we asked open-ended questions, such as, “How would you describe the dynamics between the research stations and Mo'orea's residents?” We then transcribed and categorized each respondent's answers.

Researchers Francis Pun, Sylvie Tuahu, Terevatureiariki Atger and Tauira Pun discuss survey alterations during the initial phase of the project.

A subset of this data is ready for analysis and has been uploaded as a csv file to a GitHub repository.

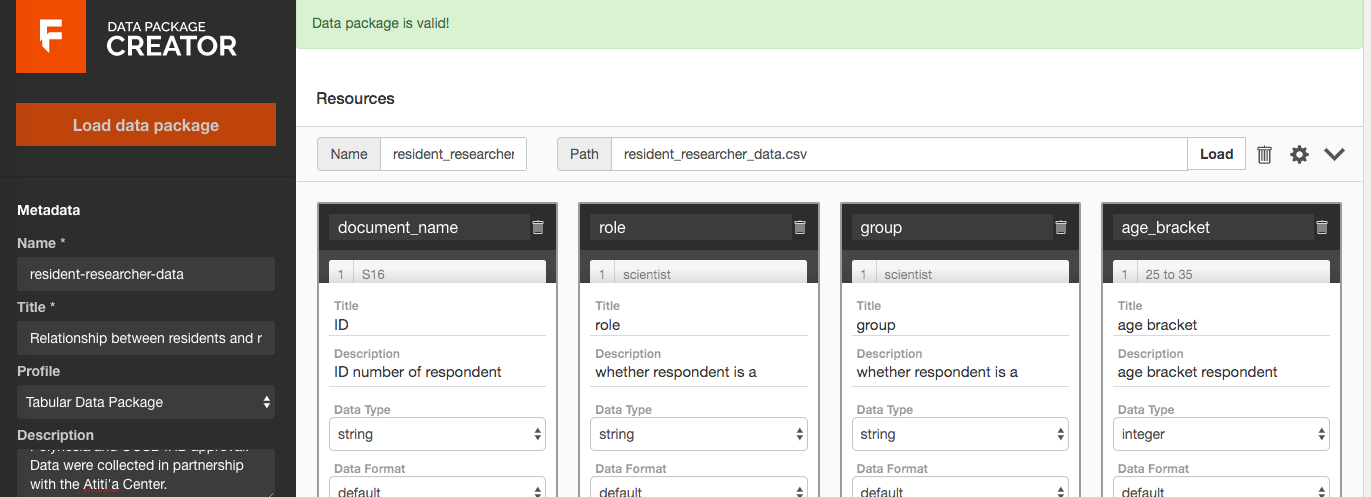

First I created a datapackage by uploading this csv file to Frictionless Data's Datapackage Creator. This tool helps articulate the data structure clearly to anyone who may be interested in it.

Creating a valid datapackage describing our dataset.

Goodtables

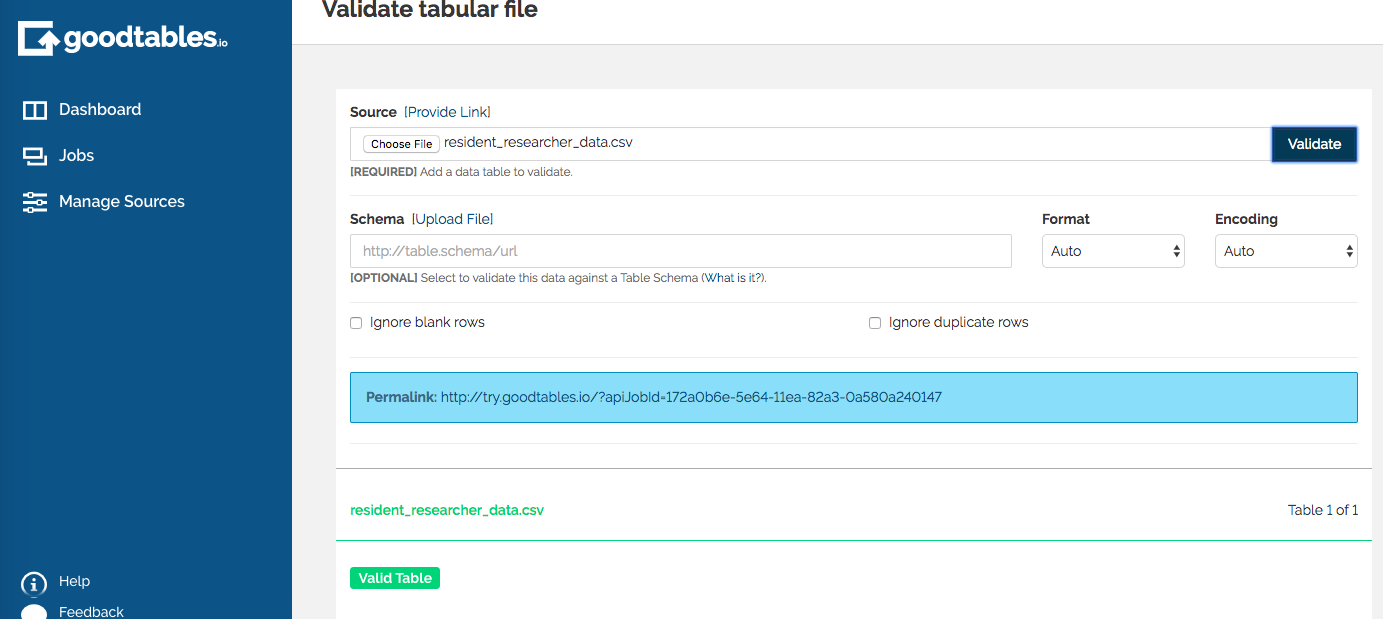

I first used try.goodtables.io, which allows for a quick check of whether your tabular dataset is valid. It will let you know where there are errors within your dataset. However, if you edit the dataset, you need to upload or relink the file again to make sure the dataset is still valid.

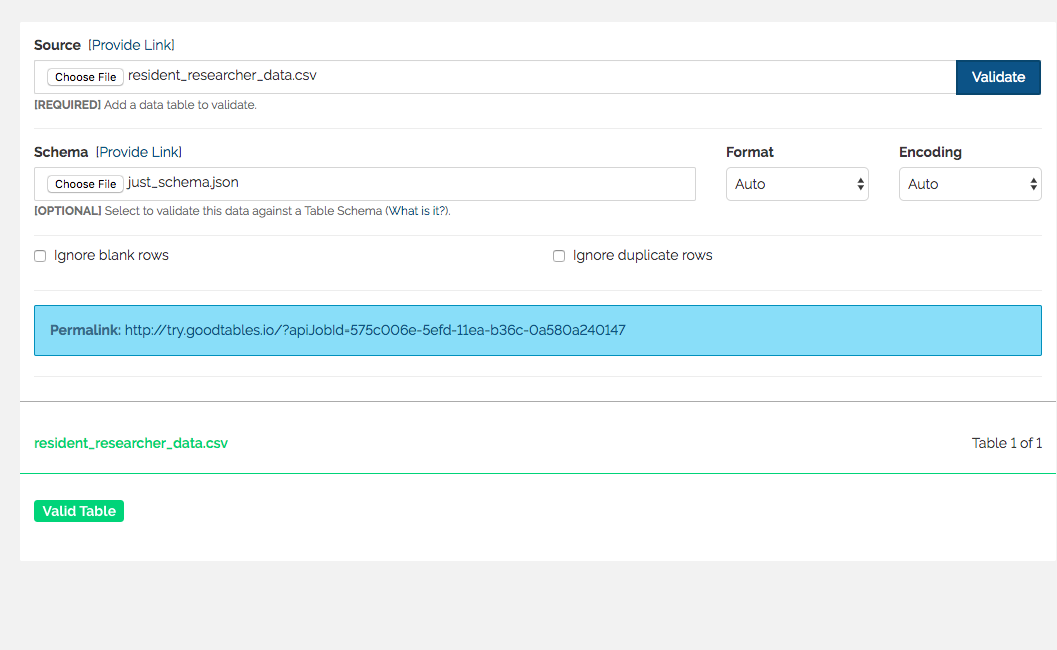

Checking for structural errors only

At first, I uploaded just my csv data file to check for structural errors.

With only the data file uploaded, goodtables indicates that the structure is valid.

This means that my dataset doesn't have blank or duplicate rows, that the structural criteria for column headers are met, and that all my rows have the same number of columns.
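To make these structural criteria more concrete, here is a minimal Python sketch of the same kinds of checks using only the standard library. It is not how goodtables itself is implemented; the function name `structural_problems` and the sample columns (`id`, `topic`) are made up for illustration.

```python
import csv
import io


def structural_problems(csv_text):
    """Return (row_number, problem) tuples for basic structural issues."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return [(0, "empty file")]

    problems = []
    header = rows[0]
    # Header checks: every column needs a name, and names must be unique.
    if any(name.strip() == "" for name in header):
        problems.append((1, "blank header"))
    if len(set(header)) != len(header):
        problems.append((1, "duplicate header"))

    seen = set()
    for number, row in enumerate(rows[1:], start=2):
        # A row with no cells, or only empty cells, counts as blank.
        if all(cell.strip() == "" for cell in row):
            problems.append((number, "blank row"))
            continue
        # Every data row should have as many cells as the header.
        if len(row) != len(header):
            problems.append((number, "wrong number of columns"))
        # A row identical to an earlier one is a duplicate.
        key = tuple(row)
        if key in seen:
            problems.append((number, "duplicate row"))
        seen.add(key)
    return problems


# Hypothetical sample: row 3 repeats row 2, row 4 is blank, row 5 is short.
sample = "id,topic\n1,fishing\n1,fishing\n\n2\n"
for row_number, problem in structural_problems(sample):
    print(row_number, problem)
```

A structurally valid file, like mine, would produce an empty list.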

Checking for content errors as a secondary step

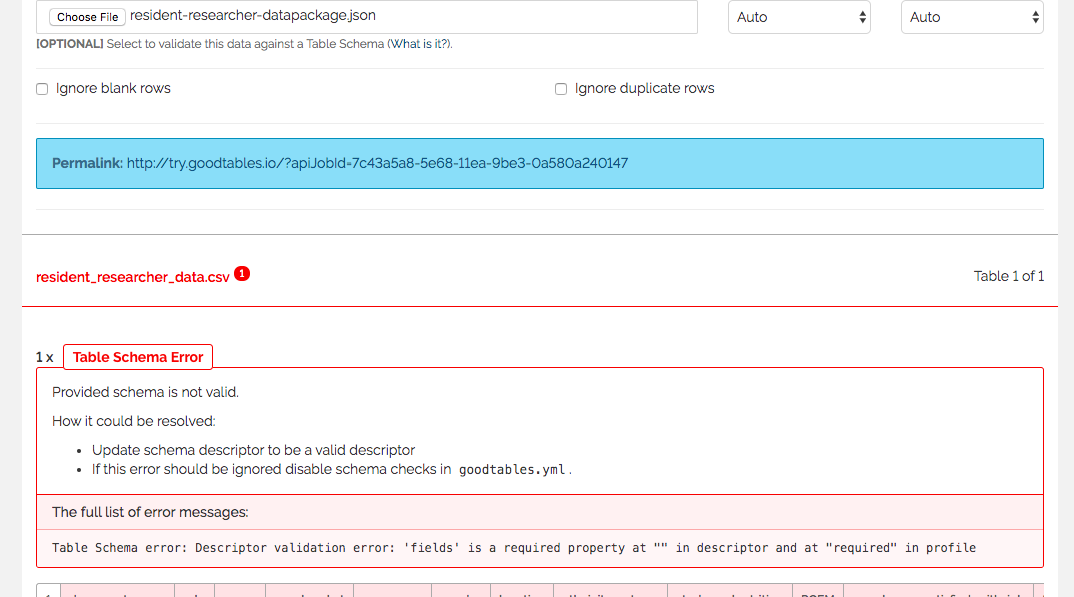

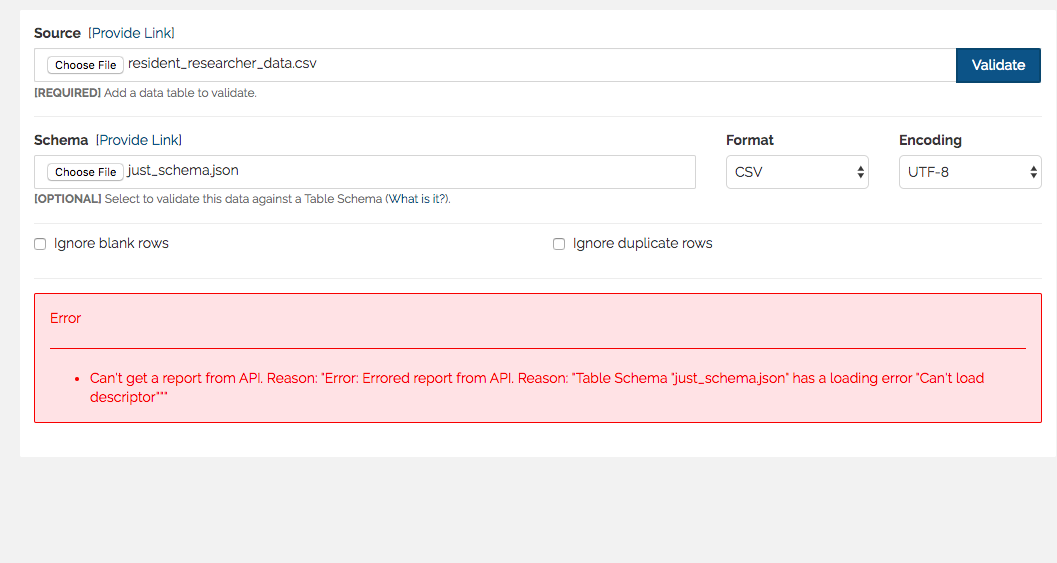

To check for content errors in my dataset, I also have to upload the schema that accompanies it. The schema I created for this dataset using the Datapackage Creator tells you what data type is in each column of my dataset. However, at first I made the mistake of loading my datapackage json file directly into the area where you are supposed to load the schema.

Uploading my datapackage json file into the goodtables schema upload area was incorrect.

The above error occurs because the datapackage json file is more than just a schema. Instead, I had to upload just the schema section of the json file (i.e. the fields section of the datapackage file) into the schema input area of the browser tool.

Correct upload of both csv data file and schema only json file.

If you go this route, make sure that when you copy just the schema section from the datapackage file, you include the { } brackets around the fields, or you will get the following error:

The error message you will receive if you do not include outer brackets in the schema file.
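Copying the schema section by hand is where the brackets are easy to lose, so one option is to pull it out with a short script instead. The sketch below assumes the datapackage follows the usual layout, with the schema stored as an object under each entry in `resources`. The descriptor shown is a made-up example, not my real file, and `extract_schema` is a hypothetical helper name.

```python
import json


def extract_schema(datapackage, resource_index=0):
    """Return the schema object of one resource in a datapackage descriptor."""
    schema = datapackage["resources"][resource_index]["schema"]
    # The schema has to be a JSON object containing a "fields" list.
    # A bare list of fields (no outer braces) is not a schema on its own.
    if not isinstance(schema, dict) or "fields" not in schema:
        raise ValueError("expected an object with a 'fields' key")
    return schema


# Hypothetical descriptor, shaped like a datapackage.json file.
datapackage = json.loads("""
{
  "name": "interview-data",
  "resources": [
    {
      "path": "data.csv",
      "schema": {
        "fields": [
          {"name": "respondent_id", "type": "integer"},
          {"name": "response", "type": "string"}
        ]
      }
    }
  ]
}
""")

# Serialising the whole object keeps the outer { } around "fields".
schema_json = json.dumps(extract_schema(datapackage), indent=2)
print(schema_json)
```

The printed text can then be saved as a schema-only json file or pasted into the schema input area.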

Streamlined Data Validation

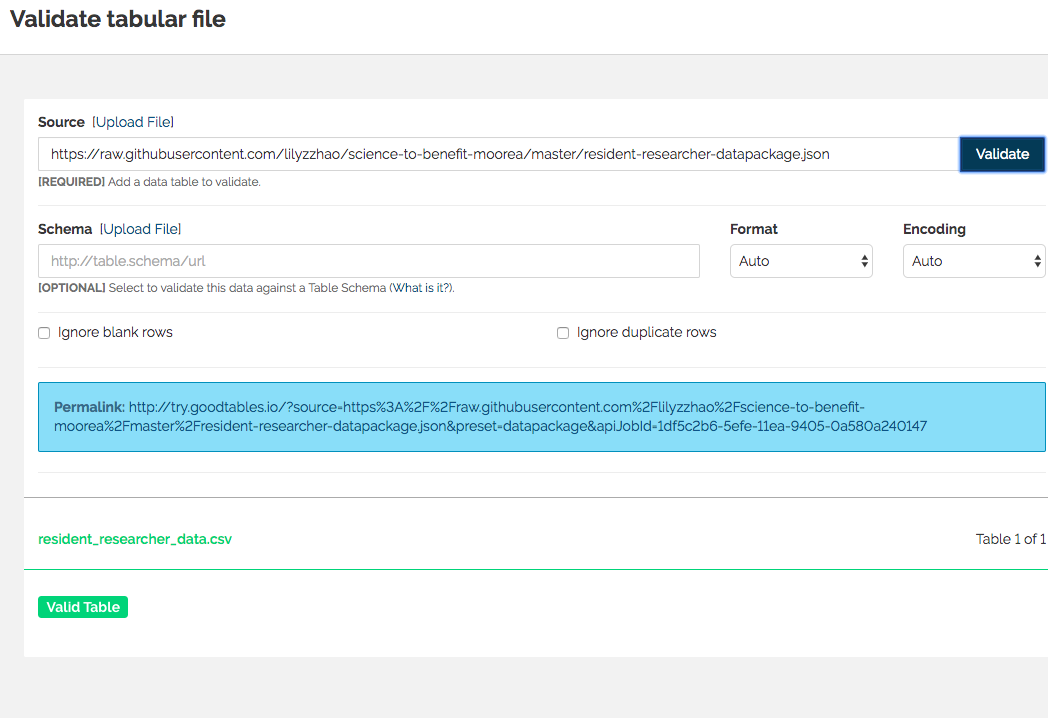

Instead of this multistep method, the most efficient way to check your data is to enter the url of your datapackage directly into the source input area of try.goodtables.io.

Upload your datapackage json GitHub url directly into goodtables for streamlined use.

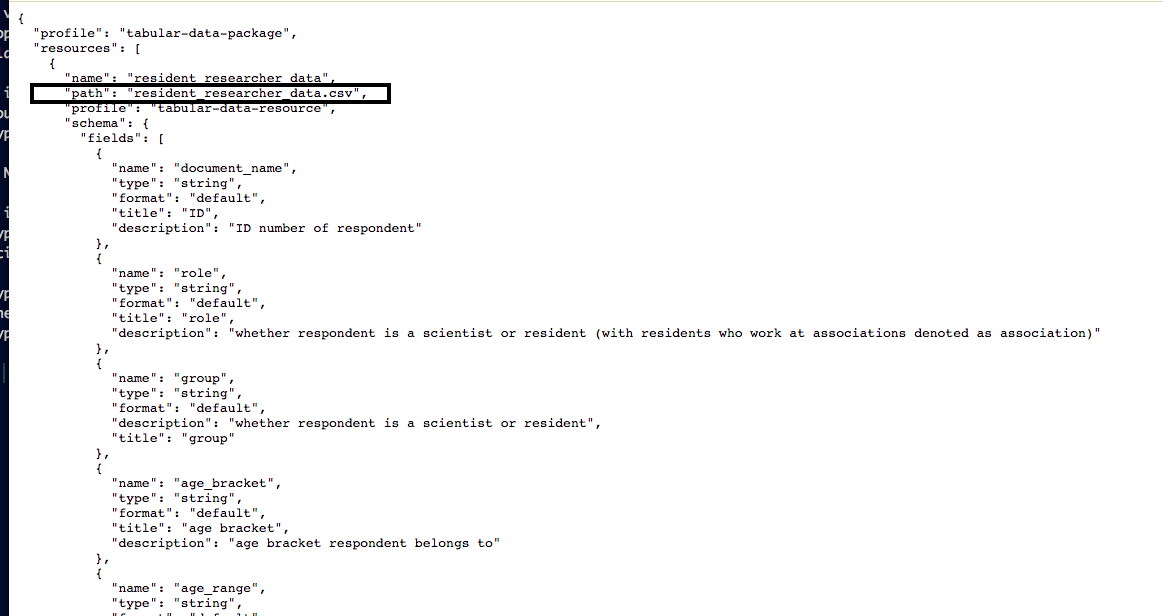

This works because the datapackage.json file includes the path to your csv file directly in it!

The path to my dataset appears in the raw datapackage json file allowing it to be uploaded into the source input area of try.goodtables.io.
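As a rough illustration of why a single url is enough, the sketch below shows how a tool could locate the data file from the datapackage alone, by resolving each resource's `path` against the location of the datapackage.json file. Whether goodtables resolves paths in exactly this way is an assumption on my part. The url and file names are placeholders, and `resource_urls` is a hypothetical helper.

```python
from urllib.parse import urljoin


def resource_urls(datapackage_url, datapackage):
    """Resolve each resource path relative to the datapackage.json location."""
    urls = []
    for resource in datapackage.get("resources", []):
        path = resource.get("path")
        if isinstance(path, str):
            # Relative paths are joined onto the datapackage url;
            # paths that are already full urls are returned unchanged.
            urls.append(urljoin(datapackage_url, path))
    return urls


# Placeholder url and descriptor, not the real repository.
base = "https://example.org/project/datapackage.json"
descriptor = {"resources": [{"name": "interviews", "path": "data/interviews.csv"}]}

print(resource_urls(base, descriptor))
```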

Continuous Data Validation

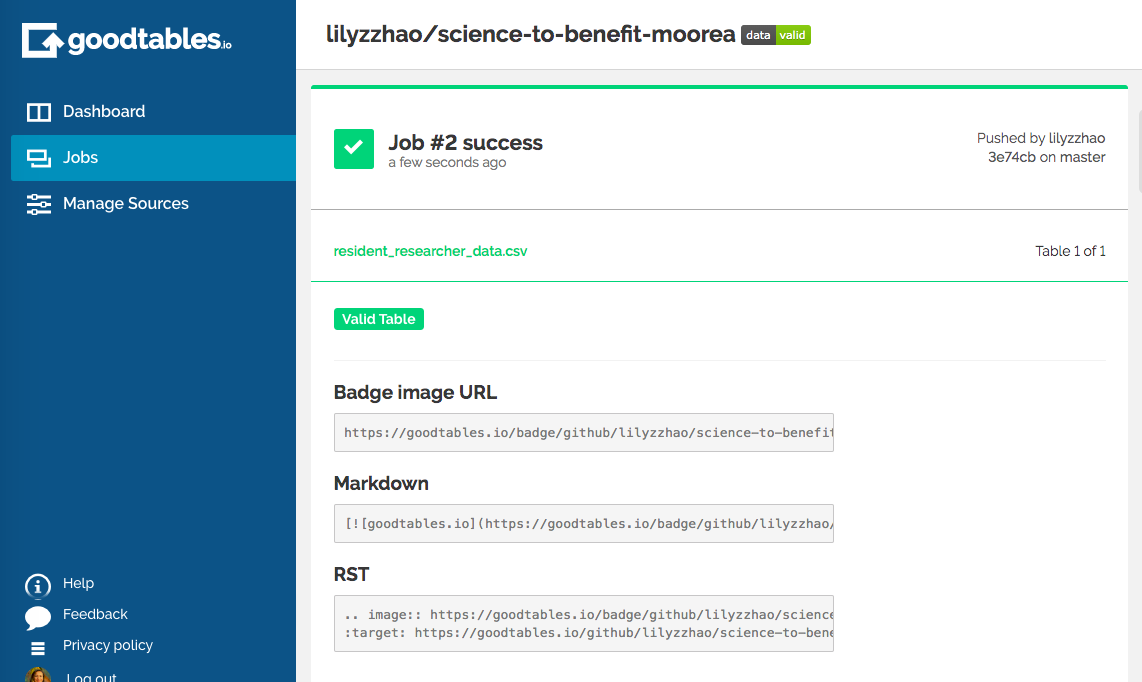

While try.goodtables.io allows you to quickly check the validity of your data, it does not allow for continuous validation. As some datasets are frequently updated, continuous validation is a wonderful feature. While I don't need continuous validation for this dataset, I tested out this feature of goodtables by linking my GitHub repository to goodtables and selecting the repository of this project. It seems to be the most streamlined option of all!

Continuous validation of the data for this project.